Why LXD?

You may have gathered that I rather like LXD, in home labs and elsewhere. So let’s delve deeper into why that is…

What even is containerization?

To begin with, we need to clear the air on what we are talking about. What even is containerization? Isn’t that just putting stuff in containers on large cargo ships? It’s actually quite similar - computer scientists aren’t the most creative when it comes to naming, so they often steal real-world terms.

Back in “ye olde days” shipping goods was actually quite the dynamic affair. You would get all sorts of cargo - raw materials of various sizes and weights, furniture, animals, barrels of various liquids etc. You know how when you go on a road trip with a large family or friends, you get an assortment of random luggage and have to play around to get it to fit in the trunk of the car? Just scale that up by a factor of 10 000. This is precisely why loading and unloading cargo would take a long time, during which the ship and crew were stuck in port wasting time and money for everybody involved, not to mention the large risk of accidents. What sucks even more is that the goods would then need to be moved on land, so you would have to load them all over again onto trucks, trains, horse carts or whatever you would use to get them to the end consumer. Ports were therefore also quite large and noisy.

At some point in the mid to late 20th century, through a convoluted process which I won’t be describing in depth, humanity had a brilliant idea - what if we put our goods into a box and then put that box on the ship? That way our box can be loaded onto any form of transport without having to spend too much time figuring out where it goes, thus saving us time and woes. Here’s the kicker: because it contains cargo, let’s call it a container.

Now that we know what real-world containers are, let’s see what they are in a computing sense. To quote the Wikipedia definition:

“… Containerization is operating system-level virtualization or application-level virtualization over multiple network resources so that software applications can run in isolated user spaces called containers in any cloud or non-cloud environment, regardless of type or vendor.”

And to put that into human speak, it’s a way for us to package software into some sort of wrapper that is standardized and can be unwrapped on any target machine. We put our program into a sort of lightweight standardized Virtual Machine which has all of the dependencies required - analogous to the real-life container - and run it on our destination host, which in real life would be the actual location where our goods are needed. I told you computer scientists aren’t that creative when it comes to naming.

So it’s just virtualization right?

Humans have been obsessed with partitioning their computers into smaller machines for quite a while. I believe this started with terminals back in the day - during the course of their development computers became far too powerful for single users to use all of their resources, leaving idle compute cycles. To address this you would sort of timeshare them by having them act as a mainframe and have dumber terminals where users would actually input what they want.

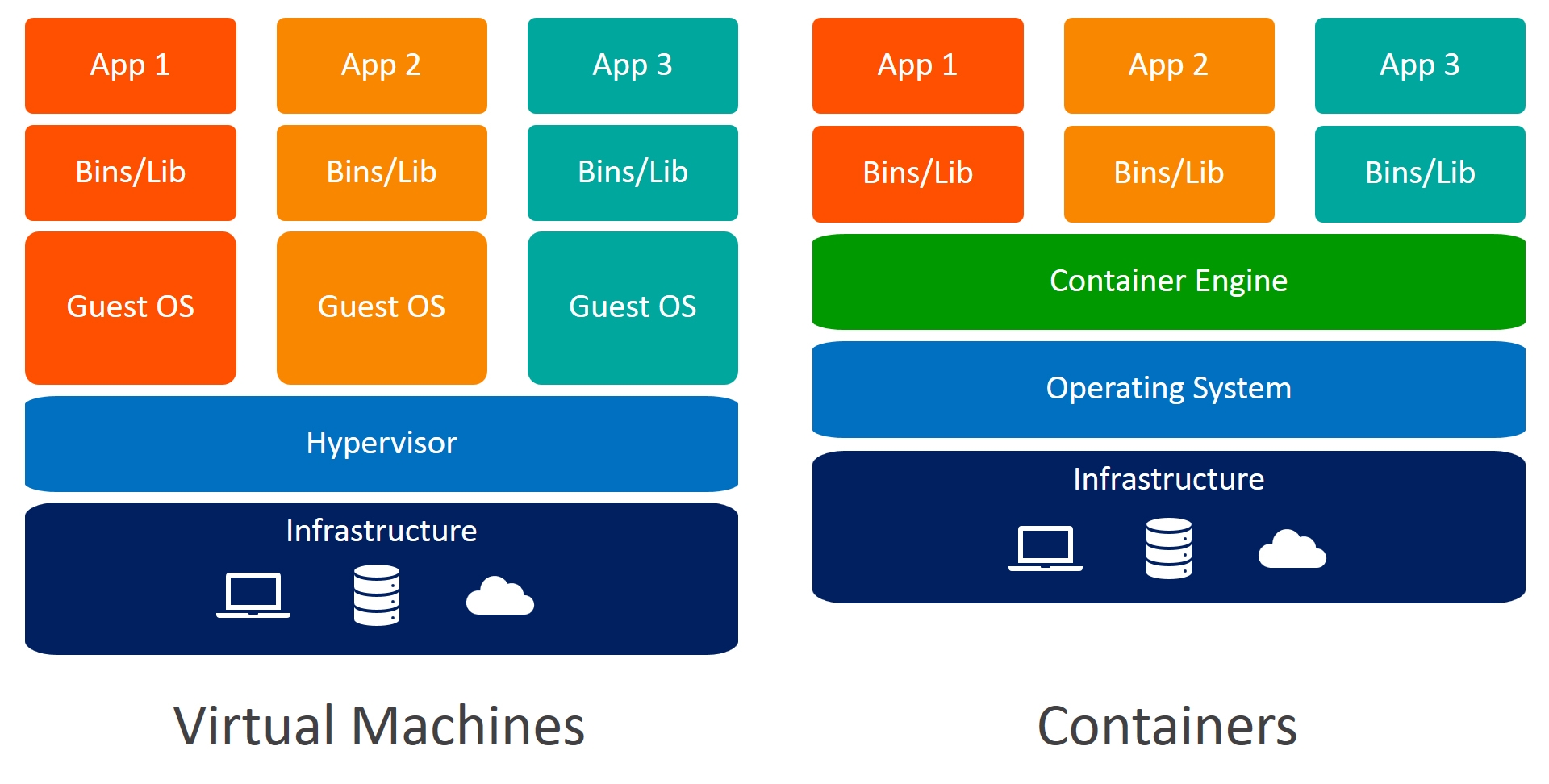

To take the concept to the next level we allow one computer (the host) to be split up into individual smaller computers (Virtual Machines/VMs) through what is called a hypervisor. What we lose in terms of raw performance for each VM we gain in actual separation between them. Each of our VMs doesn’t know about the others and can only talk to them through networking; for all intents and purposes it is a separate computer with a BIOS and everything.

So having a separate computer is great, we get the flexibility of running a unique OS with unique services, but there’s a problem: we need to actually set it up and administer it - create the VM, install an OS, configure networking, install the software that we want to run on it, configure that etc. While this can be alleviated somewhat by templating the image we use to install the OS, or by using some sort of infrastructure automation (like Puppet or Ansible), it still brings overhead. Especially with the new paradigm of taking a programmatic approach to system engineering, we need some way to bypass all of this.

If our software lives in its own little container as discussed previously, then we can simply configure that and not worry much about the rest. Containers are a layer of abstraction that does require an OS though; the main reason they are so lightweight is that they piggyback much of the required system from the underlying OS, unlike VMs which are totally separate. The below picture shows you that some binaries are shared across host and container:

This does mean that what you gain in performance you pay back in separation - moving that slider from the beginning back in the other direction a bit. You also get the ability for developers to only care about their own application being shipped, while the rest is handed back to the host.
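If you want to see the “piggybacking” for yourself, here is a minimal sketch in Python (the function name is mine, purely for illustration). It reports the kernel release that the current process sees. Run it on the host and then inside a container on that same host and you should get the same release both times, since the container has no kernel of its own; inside a VM you would instead see whatever kernel the guest OS booted.

```python
import platform


def kernel_release() -> str:
    """Return the release string of the kernel this process runs on."""
    return platform.release()


if __name__ == "__main__":
    # Compare this line between the host, a container and a VM.
    print(f"kernel release seen by this process: {kernel_release()}")
```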

Hopefully you can now see that these two technologies solve two different problems. The history of their development is separate, so let’s explore how we got to where we are today.

History of containerization

A brief disclaimer: this is the history as far as I was able to dig it up, presented how I understand it. For any factual or other errors, don’t hesitate to let me know.

Pre-history

Like a great many things in modern-day computing, this paradigm has its roots in the day of the ancients, namely the 1970s and Unix. Back then using arcane magic was the root of containerization - namely chroot-ing (badum-ts). The wise sages of the day had to solve a problem: how do we create a container for our software, users and files so that it can’t access others’ data or files while maintaining core functionality? Because everything in Unix is a file, you essentially need to quarantine disk space. Enter chroot, whereby you lie to a process at an OS level, changing the root of the file system just for it. In human speak this means that you create an empty folder with links to the binaries and files you want the process to access, then tell it “Here’s the root of the filesystem”.
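To make that a bit more concrete, here is a rough sketch in Python, not a hardened jail. It assumes a Unix-like host, and `build_jail` and `run_in_jail` are hypothetical helper names of my own. The first copies (rather than links) the chosen files into an otherwise empty folder, the second forks a child, calls `os.chroot` on that folder and executes a program inside it. The chroot step needs root, and a dynamically linked binary will also need its shared libraries copied in, so a statically linked one is the easiest thing to experiment with.

```python
import os
import shutil
import tempfile


def build_jail(jail_dir: str, files: list[str]) -> list[str]:
    """Copy each file into jail_dir, mirroring its absolute path."""
    copied = []
    for src in files:
        # e.g. /bin/sh ends up as <jail_dir>/bin/sh
        dest = os.path.join(jail_dir, src.lstrip("/"))
        os.makedirs(os.path.dirname(dest), exist_ok=True)
        shutil.copy2(src, dest)
        copied.append(dest)
    return copied


def run_in_jail(jail_dir: str, argv: list[str]) -> int:
    """Run argv with jail_dir as its filesystem root (requires root)."""
    pid = os.fork()
    if pid == 0:
        # Child: swap the root, then replace ourselves with the program.
        try:
            os.chroot(jail_dir)
            os.chdir("/")
            os.execv(argv[0], argv)
        finally:
            # Only reached if chroot or exec failed.
            os._exit(127)
    _, status = os.waitpid(pid, 0)
    return os.waitstatus_to_exitcode(status)


if __name__ == "__main__":
    # Safe demo without root: only build the folder, don't chroot into it.
    with tempfile.TemporaryDirectory() as scratch:
        sample = os.path.join(scratch, "hello.txt")
        with open(sample, "w") as fh:
            fh.write("hi from outside the jail\n")
        jail = os.path.join(scratch, "jail")
        print(build_jail(jail, [sample]))
```

With root and a suitable binary copied in, something like `run_in_jail(jail, ["/bin/sh"])` would drop you into a shell that believes the folder is `/`.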

Chrooting was so popular that it continued to evolve through the years, though a bit under the radar.

Jails

Unix spawned many children throughout the years, one of which was the Berkeley Software Distribution or BSD. One use case was to create a honeypot in a chrooted system where you would monitor the hacker, in essence putting them in a sort of jail. The BSD community continued to expand chroot, now dubbing it Jails and extending it to be able to have IP addresses and networking assigned to it. The main flavor of BSD that pioneered this concept was FreeBSD.

“Jails improve on the concept of the traditional chroot environment in several ways. In a traditional chroot environment, processes are only limited in the part of the file system they can access. The rest of the system resources, system users, running processes, and the networking subsystem are shared by the chrooted processes and the processes of the host system. Jails expand this model by virtualizing access to the file system, the set of users, and the networking subsystem. More fine-grained controls are available for tuning the access of a jailed environment. Jails can be considered as a type of operating system-level virtualization.”

Already starting to sound a lot like containers.

Adoption by the Linux community

Indeed the first containers came about as the Linux community wanted to port Jails. There was nothing built into the kernel as of yet, so third-party solutions had to be relied on. In the early 2000s Virtuozzo provided just that, unfortunately only commercially. Though not OSS at the time, they would eventually release their code as OpenVZ in 2005, and although popular it never seemed to have the full feature set of the commercial version. I believe it was Virtuozzo that first started calling them containers.

In parallel, Linux-VServer came along to provide an open source approach to the “Jails on Linux” problem. Though it now seems to be defunct, it’s important in a historical context, at least for the way they dubbed containers - Virtual Private Servers.

Fast forward a bit and development was speeding up with the advent of “Process Containers” by Google engineers. This allowed system resources to be limited for specific processes, essentially telling them exactly how much memory, CPU, disk I/O or other system resources they could use. In 2007 this was adopted into the Linux kernel, though renamed to cgroups, since the kernel already used the term containers in other contexts and keeping it would have led to confusion. Namespaces were also incorporated, which segregate the available resources and only show them to the processes inside that particular namespace.
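Both features are easy to poke at from userspace on a Linux box. The sketch below is read-only and only assumes the standard `/proc/self/cgroup` file and `/proc/self/ns` directory; the function names are hypothetical ones I made up. It lists which cgroup(s) the current process belongs to and which namespaces it lives in. Two processes that share a namespace report the same identifier, while a process inside a container will typically report different ones from the host.

```python
import os


def my_cgroups(path: str = "/proc/self/cgroup") -> list[str]:
    """Return the cgroup membership lines of this process ([] if unavailable)."""
    try:
        with open(path) as fh:
            return [line.strip() for line in fh if line.strip()]
    except OSError:
        return []


def my_namespaces(ns_dir: str = "/proc/self/ns") -> dict[str, str]:
    """Map each namespace type (pid, net, mnt, ...) to its identifier."""
    result: dict[str, str] = {}
    try:
        names = sorted(os.listdir(ns_dir))
    except OSError:
        return result
    for name in names:
        try:
            # Each entry is a symlink whose target names the namespace.
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue
    return result


if __name__ == "__main__":
    for line in my_cgroups():
        print("cgroup:", line)
    for kind, ident in my_namespaces().items():
        print("namespace:", kind, "->", ident)
```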

Now we have a way to separate resources and also have them be visible only to the process that is supposed to see them. The time was ripe to put the two together, and what came out of it in 2008 with LXC was something that we can recognize as a modern-day container engine. It would allow you to spin up a machine running a distro of your choice while controlling its resource allocation and everything we’ve come to expect. Mind you, Virtuozzo could also do the same, but their commercial-first approach meant limited adoption, whereas this relied only on features baked into the kernel. Thus it started garnering popularity and was used by a small upstart project you might’ve heard of…

The rise of Docker

Docker started out their container engine by actually using LXC as a runtime, though they eventually phased it out and now use their own engine that uses the cgroups and namespacing kernel features. The team behind the big-blue-whale differentiated by focusing more on shipping individual applications in their containers and customizing the image to that particular program, rather than providing more agnostic container images. Individual instances would still share kernel and networking features but they would be as bare bones as possible in terms of what is inside them.

Anyone who has actually tried to enter a Docker container that is running, say, some Node.js application would know that sometimes there isn’t even any shell shipped other than the Bourne shell (sh). You don’t even have log directories most of the time, instead relying on the host itself to capture output. This stands in stark contrast to standard LXC, where containers are like mini-versions of full-fledged machines, with syslog and most of everything you need.

The Docker approach was also to offer an easy way to build images and even store them on Docker Hub where anybody could download them. No longer just for sys-admin types, containers could be used by the masses and use them they did. Developers have long struggled with having a unified install base. The “Works on my machine” mantra is great, but we can’t ship your machine - well, not unless we create a container image of it and actually distribute that… That is exactly what happened and developers the world over were jubilant. No longer would they have to troubleshoot bugs or edge cases: just put an image out there with your software, it already has all it needs and all is right in the world!

All of this meant insane popularity for Docker amongst developers, who moved many of their projects to a containerized output or at least added an image as a download option. Not to mention the fact you could split your large app into smaller ones that run in containers and talk to each other, moving from one monolithic service to microservices, something which I’ll gripe about at a later date. Unfortunately, giving too much power to any organization, especially a commercial one, is risky, and as we have seen in some examples we need OSS alternatives to everything, which we can host ourselves.

To summarize, Docker focuses on application containers, which is great, we can cluster it and orchestrate it with Kubernetes, but we’re still out there looking for something that offers more of an old-school system container…

LXD

Technology marches ever forward, and while we still needed system containers we also needed a better way of managing what the Linux kernel provided, as nobody likes to remember clunky commands and arcanery. To that end LXD was developed by Canonical and written in Go to offer an easier wrapper for using LXC. Apart from just quality of life in everyday use, it also added a bunch of new features throughout the years: quick bootstrapping of the machine itself, multiple storage backends, clustering, high availability for containers and even the ability to manage VMs. As such, LXD is more of a manager for LXC and QEMU instances than a pure container engine or hypervisor. One that works all too well, I might add!
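To give a feel for the “one manager, two kinds of instance” idea, here is a small sketch in Python that builds the `lxc launch` command line for either a container or a VM (the `--vm` flag is what switches between them). The helper names, the instance names and the `ubuntu:22.04` image are just my assumptions for illustration, and by default the script only prints the commands instead of running them.

```python
import shlex
import shutil
import subprocess


def launch_command(image: str, name: str, vm: bool = False) -> list[str]:
    """Build the lxc command that starts a container, or a VM if vm=True."""
    cmd = ["lxc", "launch", image, name]
    if vm:
        cmd.append("--vm")
    return cmd


def launch(image: str, name: str, vm: bool = False, dry_run: bool = True) -> list[str]:
    """Print the command; only execute it if dry_run is False and lxc exists."""
    cmd = launch_command(image, name, vm)
    print(shlex.join(cmd))
    if not dry_run and shutil.which("lxc") is not None:
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    launch("ubuntu:22.04", "web1")           # system container
    launch("ubuntu:22.04", "vm1", vm=True)   # QEMU-backed virtual machine
```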

Pros of LXD

Some features I’ve already mentioned but to list them off:

- Clustering, this allows high availability and scaling for instances.

- Minimal setup times with their bootstrapping scripts

- LXC backed, so it offers system containers

- VM support with QEMU

- Able to create multiple projects and profiles to segregate the use of resources

- Awesome dev and community behind it, answering all of my idiotic questions throughout the years!

and many more! The team is constantly listening to feedback so you can always put a suggestion up for discussion. It seems they are doing a good job, as the LXC project itself is now under Canonical as well.
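As a rough illustration of the projects and profiles point from the list above, this Python sketch generates the `lxc` commands that create a project and a profile inside it with CPU and memory caps. The project name `homelab`, the profile name `small` and the helper function are all made up for the example, and the exact CLI syntax can vary between LXD versions, so treat it as a starting point and check `lxc profile set --help` on your install. Depending on how the project is configured, a fresh profile may also need storage and network devices before an instance using it will start.

```python
import shlex


def project_profile_commands(project: str, profile: str,
                             cpus: int, memory: str) -> list[list[str]]:
    """Build lxc commands for a project plus a resource-capped profile in it."""
    scoped = ["--project", project]  # run the profile commands inside the project
    return [
        ["lxc", "project", "create", project],
        ["lxc", "profile", "create", profile, *scoped],
        ["lxc", "profile", "set", profile, "limits.cpu", str(cpus), *scoped],
        ["lxc", "profile", "set", profile, "limits.memory", memory, *scoped],
    ]


if __name__ == "__main__":
    # Only prints the commands; nothing is executed.
    for cmd in project_profile_commands("homelab", "small", cpus=2, memory="2GiB"):
        print(shlex.join(cmd))
```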

Cons of LXD

I don’t want to sound like an advertisement and there are some aspects of this project I actually do not like. Some of them include:

- The insistence on using Snap. While it can be a neat little package manager, it doesn’t let one hold back an upgrade for more than X amount of time. This has caused me some headaches throughout the years when one cluster member fails to upgrade due to a solar flare and we get hung instances… I suppose this clinging on to Snap comes from Canonical.

- The documentation can be a bit lackluster

- The Canonical integration is a bit of a problem as they tend to focus on using their particular solutions a bit too much at times.

That said, I still love the project and these are addressable problems.

In conclusion

LXD is sort of like the Kubernetes for system containers. It gives us endless options when building our infrastructure and I use it extensively at home. I hope you give it a shot as well!